Overview

While the term R-A-I-D comes from Redundant Array of Independent (originally Inexpensive) Disks, the words "recoverable" and "replicable" are more accurate than "redundant".

Historically, storage devices have been disk drives. In embedded applications they can be any type of storage device (NAND, NOR, SD) and, given the system sizes, storage is rarely “independent”. The standard terminology nevertheless remains RAID. Instead of disk or drive, the more relevant term, especially for embedded systems, is “partition”.

A partition is a section of a storage device that is treated, by the operating system, as if it were a separate device. Both RAID1 and RAID5 store data over multiple partitions so the original data can be recovered or replicated in the event of a storage drive failure.

Key features

- Increases usable storage capacity versus RAID 1

- Provides protection from storage device defects/failures

- Works on the storage layer with both FAT and EFS file systems

- Can be used with any storage device supported by emFile (such as NAND, NOR, and SD cards)

- Can use different storage types for partitions

- Can locate all partitions on the same storage device

RAID 5 – Theory of operation

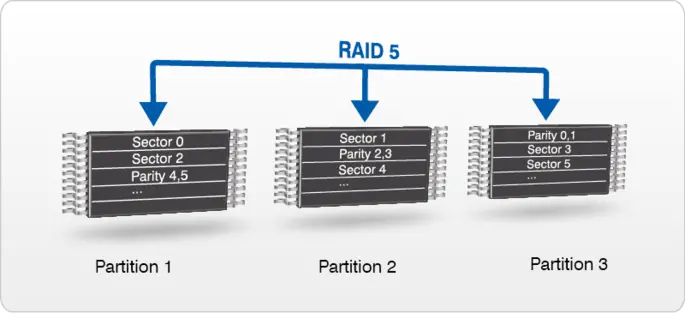

RAID5 requires at least three storage partitions. Storage space equivalent to the size of one partition is used for parity information, making it unavailable for data storage. Parity checking means storing an extra piece of information (the result of a formula) that can be used to reconstruct data after a hardware defect or failure. Because the parity overhead is always the equivalent of one partition, its share of the total capacity shrinks as partitions are added: one third with three partitions, one quarter with four. The RAID5 add-on calculates the parity information using the “eXclusive Or” (XOR) function. XOR has the property that if any one input is lost (i.e. one partition fails), the missing data can be calculated (i.e. recovered or replicated) from the XOR result and the remaining inputs.
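The XOR property described above can be illustrated with a small, self-contained sketch. It is not the add-on's implementation: the 8-byte sector size, the three-sector stripe, and the names `XorSectors` and `ParityDemo` are assumptions chosen for illustration only.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

#define SECTOR_SIZE  8u   // Tiny sector size, for illustration only

//
// XORs the first NumSectors sectors of a stripe into pResult,
// leaving out the sector at index Skip.
// pResult may point to the skipped sector, but not to any other one.
//
static void XorSectors(uint8_t aStripe[][SECTOR_SIZE], size_t NumSectors, size_t Skip, uint8_t * pResult) {
  size_t i;
  size_t j;

  memset(pResult, 0, SECTOR_SIZE);
  for (i = 0; i < NumSectors; ++i) {
    if (i == Skip) {
      continue;
    }
    for (j = 0; j < SECTOR_SIZE; ++j) {
      pResult[j] ^= aStripe[i][j];
    }
  }
}

//
// Builds a stripe of two data sectors and one parity sector,
// then rebuilds data sector 1 as if its partition had failed.
// Returns 0 if the rebuilt sector matches the original.
//
static int ParityDemo(void) {
  uint8_t aStripe[3][SECTOR_SIZE] = {
    { 'R', 'A', 'I', 'D', '5', ' ', 'A', '0' },   // Data sector on partition 0
    { 's', 'e', 'c', 't', 'o', 'r', 'B', '1' },   // Data sector on partition 1
    { 0 }                                         // Parity sector on partition 2
  };
  uint8_t aRebuilt[SECTOR_SIZE];

  //
  // Parity = XOR of every sector in the stripe except the parity slot itself.
  //
  XorSectors(aStripe, 3, 2, aStripe[2]);
  //
  // Pretend partition 1 is lost: XOR the surviving sectors (data 0 and parity).
  //
  XorSectors(aStripe, 3, 1, aRebuilt);
  return (memcmp(aRebuilt, aStripe[1], SECTOR_SIZE) == 0) ? 0 : 1;
}
```

The same routine serves both purposes: computing parity and rebuilding a lost sector are the same XOR over "all sectors but one".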

RAID 5 versus RAID 1

RAID1 uses mirroring to enable data recovery. A copy of all data on the master partition is kept on a separate partition called the mirror. The master and the mirror may be on the same storage device or on separate devices. In the case of a hardware defect or failure on the master partition, data can be recovered from the mirror partition.

RAID5 distributes data over several partitions and uses parity checking to enable data recovery. Saving a parity check, rather than a full copy of the data, reduces the amount of storage required to recreate original data if necessary.

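RAID5 uses less storage for redundancy than RAID1 while protecting against the same event, the failure of a single partition: a mirror doubles the storage required, whereas RAID5 adds only the equivalent of one partition. This can be a crucial advantage in a resource-constrained environment. RAID1 is slightly faster than RAID5 because fewer operations are needed to achieve data security.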
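The capacity difference can be made concrete with a short calculation. The sketch below assumes equally sized partitions; the function names are hypothetical and not part of emFile.

```c
#include <stdint.h>

//
// RAID 1: the master partition holds the data, the mirror holds the copy,
// so the usable capacity equals the size of one partition.
//
static uint32_t Raid1UsableSectors(uint32_t SectorsPerPartition) {
  return SectorsPerPartition;
}

//
// RAID 5: the equivalent of one partition holds parity,
// the remaining partitions hold data.
// Returns 0 for fewer than three partitions (not a valid RAID 5 set).
//
static uint32_t Raid5UsableSectors(uint32_t NumPartitions, uint32_t SectorsPerPartition) {
  if (NumPartitions < 3u) {
    return 0u;
  }
  return (NumPartitions - 1u) * SectorsPerPartition;
}
```

With 1000 sectors per partition, a RAID 1 pair offers 1000 usable sectors out of 2000 (50 %), a three-partition RAID 5 set offers 2000 out of 3000 (about 67 %), and a five-partition set offers 4000 out of 5000 (80 %).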

RAID with SEGGER’s NAND driver

SEGGER’s in-house, proprietary, Universal NAND Flash Driver is best-in-class in terms of speed, efficiency, reliability, and data protection. The driver has been carefully optimized to use as little RAM and ROM as possible while maintaining high performance.

It can correct single and multiple bit errors using either the hardware ECC (error-correcting code) built into the NAND flash or the software ECC routines provided by the emLib ECC library. The NAND drivers provide active and passive wear leveling and bad-block management, and are fail-safe. The RAID add-on provides an extra level of precaution to an already robust system. RAID maximizes data integrity and reliability.

Together, SEGGER’s NAND driver and the RAID add-on avoid data loss when an uncorrectable bit error occurs during a read operation. When such an error occurs, the Universal NAND driver requests corrected sector data from the RAID 5 add-on, which reconstructs it from the other partitions. This procedure applies to read requests coming from the file system as well as to read operations performed internally by the Universal NAND driver when it copies the data of a NAND block to another location.
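The fallback path can be sketched as a small simulation. This is not the driver's code: `SIM_SECTOR`, `ReadRaw`, `ReadWithRecovery`, and the `IsUnreadable` flag are hypothetical stand-ins for a sector read that reports an uncorrectable bit error, and the stripe is fixed at three partitions with 4-byte sectors.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

#define SIM_NUM_PARTS    3u   // Partitions per stripe (illustrative)
#define SIM_SECTOR_SIZE  4u   // Tiny sector size, for illustration only

typedef struct {
  uint8_t aData[SIM_SECTOR_SIZE];
  int     IsUnreadable;       // Simulates an uncorrectable bit error
} SIM_SECTOR;

//
// Hypothetical low-level read. Returns 0 on success, 1 on read error.
//
static int ReadRaw(const SIM_SECTOR * pSector, uint8_t * pData) {
  if (pSector->IsUnreadable) {
    return 1;
  }
  memcpy(pData, pSector->aData, SIM_SECTOR_SIZE);
  return 0;
}

//
// Reads the sector on partition PartIndex. If that read fails, the sector
// is rebuilt by XORing the sectors of all other partitions in the stripe.
// Returns 0 on success, 1 if a second partition is unreadable as well.
//
static int ReadWithRecovery(const SIM_SECTOR * paStripe, size_t PartIndex, uint8_t * pData) {
  uint8_t aTemp[SIM_SECTOR_SIZE];
  size_t  i;
  size_t  j;

  if (ReadRaw(&paStripe[PartIndex], pData) == 0) {
    return 0;                 // Normal case, no recovery needed
  }
  memset(pData, 0, SIM_SECTOR_SIZE);
  for (i = 0; i < SIM_NUM_PARTS; ++i) {
    if (i == PartIndex) {
      continue;
    }
    if (ReadRaw(&paStripe[i], aTemp) != 0) {
      return 1;               // Two failures in one stripe cannot be recovered
    }
    for (j = 0; j < SIM_SECTOR_SIZE; ++j) {
      pData[j] ^= aTemp[j];
    }
  }
  return 0;
}

//
// Returns 0 if a single failure is recovered and a double failure is reported.
//
static int RecoveryDemo(void) {
  SIM_SECTOR aStripe[SIM_NUM_PARTS] = {
    { { 0x01, 0x02, 0x03, 0x04 }, 0 },   // Data
    { { 0x10, 0x20, 0x30, 0x40 }, 0 },   // Data
    { { 0x11, 0x22, 0x33, 0x44 }, 0 }    // Parity (XOR of the two data sectors)
  };
  uint8_t aData[SIM_SECTOR_SIZE];

  aStripe[1].IsUnreadable = 1;
  if (ReadWithRecovery(aStripe, 1, aData) != 0) {
    return 1;
  }
  if (memcmp(aData, aStripe[1].aData, SIM_SECTOR_SIZE) != 0) {
    return 2;
  }
  aStripe[0].IsUnreadable = 1;           // Second failure in the same stripe
  if (ReadWithRecovery(aStripe, 1, aData) == 0) {
    return 3;
  }
  return 0;
}
```

The sketch also shows the limit of single-parity protection: once two sectors of the same stripe are unreadable, the read has to fail.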

Sector data synchronization

An unexpected reset that interrupts a write operation may leave the data inconsistent: the data of the last written sector may have been updated on the storage device while the corresponding parity information was not. After restart, the file system continues to operate correctly. However, if a read error later affects this sector or any other sector in the same stripe, the RAID5 component cannot recover the data, which may result in data corruption. This situation can be prevented by synchronizing all sectors on the RAID5 volume. The application does this by calling the FS_STORAGE_SyncSectors() API function, either during file system initialization or in parallel with normal file system activity, for example in a low-priority task.
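Why stale parity is harmful, and why recomputing it helps, can be shown with a one-byte-per-sector simulation. This is only a model of the problem, not of what FS_STORAGE_SyncSectors() does internally; `SIM_STRIPE` and all function names are hypothetical.

```c
#include <stdint.h>

typedef struct {
  uint8_t Data0;    // Sector on partition 0
  uint8_t Data1;    // Sector on partition 1
  uint8_t Parity;   // Parity sector on partition 2
} SIM_STRIPE;

// Returns 1 if the stored parity matches the stored data.
static int IsStripeConsistent(const SIM_STRIPE * pStripe) {
  return pStripe->Parity == (uint8_t)(pStripe->Data0 ^ pStripe->Data1);
}

// Models a synchronization: parity is recomputed from the data sectors.
static void SyncStripe(SIM_STRIPE * pStripe) {
  pStripe->Parity = (uint8_t)(pStripe->Data0 ^ pStripe->Data1);
}

// Rebuilds Data1 from Data0 and parity, as after a read error on partition 1.
static uint8_t RecoverData1(const SIM_STRIPE * pStripe) {
  return (uint8_t)(pStripe->Data0 ^ pStripe->Parity);
}

//
// Returns 0 if stale parity yields wrong data and synchronization repairs it.
//
static int SyncDemo(void) {
  SIM_STRIPE Stripe = { 0x12, 0x34, 0x12 ^ 0x34 };

  //
  // New data reaches partition 0, then a reset occurs
  // before the parity sector is updated.
  //
  Stripe.Data0 = 0x56;
  if (IsStripeConsistent(&Stripe)) {
    return 1;
  }
  if (RecoverData1(&Stripe) == 0x34) {
    return 2;       // Would mean the stale parity still recovers correctly
  }
  //
  // After synchronization, the untouched sector is recoverable again.
  //
  SyncStripe(&Stripe);
  if (RecoverData1(&Stripe) != 0x34) {
    return 3;
  }
  return 0;
}
```

Note that the sector damaged by the stale parity is `Data1`, which was never written. This is why a read error on any sector of the affected stripe is unrecoverable until the stripe has been synchronized.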

Sample configuration

The following code snippet shows how to configure the RAID 5 add-on to use three partitions located on the same NAND flash device.

    #include "FS.h"                   // emFile API

    /*********************************************************************
    *
    *       Defines, configurable
    *
    **********************************************************************
    */
    #define ALLOC_SIZE      0x8000    // Size of the memory pool in bytes

    /*********************************************************************
    *
    *       Static data
    *
    **********************************************************************
    */
    static U32 _aMemBlock[ALLOC_SIZE / 4];    // Memory pool used for
                                              // semi-dynamic allocation.

    /*********************************************************************
    *
    *       FS_X_AddDevices
    *
    *  Function description
    *    This function is called by the FS during FS_Init().
    *    It is supposed to add all devices, using primarily FS_AddDevice().
    *
    *  Note
    *    (1) Other API functions may NOT be called, since this function is called
    *        during initialization. The devices are not yet ready at this point.
    */
    void FS_X_AddDevices(void) {
      //
      // Give the file system some memory to work with.
      //
      FS_AssignMemory(&_aMemBlock[0], sizeof(_aMemBlock));
      //
      // Add and configure the RAID5 volume. Volume name: "raid5:0:"
      // The three partitions start at sectors 0, 1000 and 2000 of NAND unit 0.
      //
      FS_AddDevice(&FS_RAID5_Driver);
      FS_RAID5_AddDevice(0, &FS_NAND_UNI_Driver, 0, 0);
      FS_RAID5_AddDevice(0, &FS_NAND_UNI_Driver, 0, 1000);
      FS_RAID5_AddDevice(0, &FS_NAND_UNI_Driver, 0, 2000);
      FS_RAID5_SetNumSectors(0, 1000);
      //
      // Add and configure the NAND driver. Volume name: "nand:0:"
      // NAND_NUM_BLOCKS is not defined in this snippet;
      // the application has to provide it.
      //
      FS_AddDevice(&FS_NAND_UNI_Driver);
      FS_NAND_UNI_SetPhyType(0, &FS_NAND_PHY_ONFI);
      FS_NAND_UNI_SetECCHook(0, &FS_NAND_ECC_HW_NULL);
      FS_NAND_UNI_SetBlockRange(0, 0, NAND_NUM_BLOCKS);
      FS_NAND_ONFI_SetHWType(0, &FS_NAND_HW_K66_SEGGER_emPower);
    }
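When several partitions share one storage device, as in the configuration above, their sector ranges must not overlap. The following sketch is a plausibility check that could be run on the chosen numbers at design time. It assumes the last argument of each FS_RAID5_AddDevice() call in the sample is the start sector of the partition and that the value passed to FS_RAID5_SetNumSectors() is the size of each partition. `IsLayoutValid` and the device size used below are hypothetical and not part of emFile.

```c
#include <stddef.h>
#include <stdint.h>

//
// Checks a RAID 5 layout in which every partition has NumSectors sectors.
// Returns 1 if there are at least three partitions, none of them overlap,
// and all of them fit into a device of DeviceNumSectors sectors.
// Returns 0 otherwise.
//
static int IsLayoutValid(const uint32_t * paStartSector, size_t NumPartitions, uint32_t NumSectors, uint32_t DeviceNumSectors) {
  size_t   i;
  size_t   j;
  uint32_t Dist;

  if ((NumPartitions < 3u) || (NumSectors > DeviceNumSectors)) {
    return 0;
  }
  for (i = 0; i < NumPartitions; ++i) {
    if (paStartSector[i] > DeviceNumSectors - NumSectors) {
      return 0;               // Partition extends past the end of the device
    }
    for (j = i + 1u; j < NumPartitions; ++j) {
      if (paStartSector[i] > paStartSector[j]) {
        Dist = paStartSector[i] - paStartSector[j];
      } else {
        Dist = paStartSector[j] - paStartSector[i];
      }
      if (Dist < NumSectors) {
        return 0;             // Two partitions overlap
      }
    }
  }
  return 1;
}
```

For the sample values (start sectors 0, 1000 and 2000 with 1000 sectors each) the check passes on a device that exposes at least 3000 sectors, and it fails if, for example, the second partition started at sector 500.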